Millennium®, since version 2007.02, has tried to reduce its proprietary middleware footprint by using IBM’s WebSphereMQ for client communications to the application nodes. This is often referred to as MQSS, for WebSphereMQ Shared Services. It provides an enormous leap forward in throughput and performance for most Millennium users but, contrary to expectations, it has not lessened the need for Shared Service Proxies (SSPs).

Millennium’s initial design specification for its proprietary middleware was 125 messages per second, which was a respectable figure when it was introduced 15 years ago. In practice, it often saw higher throughput thanks to newer compilers, faster CPUs and other changes. For this sort of communication (often referred to as non-persistent queuing), however, WebSphereMQ version 5.3 was benchmarked at more than 3,200 transactions per second (tps), and version 6.2 at more than 3,800 tps, where each transaction is 2 kB in size.
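To put those numbers side by side, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above; the function names are mine, and a design specification is not a like-for-like match for a vendor benchmark, so treat the ratios as an order-of-magnitude comparison rather than a measurement.

```python
# Figures quoted in the paragraph above; the benchmarks are "more than" values,
# so these results are lower bounds.
PROPRIETARY_DESIGN_MPS = 125   # proprietary middleware design spec, messages/sec
MQ_53_TPS = 3200               # WebSphereMQ 5.3 benchmark, transactions/sec
MQ_62_TPS = 3800               # WebSphereMQ 6.2 benchmark, transactions/sec
MESSAGE_SIZE_KB = 2            # size of each benchmarked transaction


def speedup(new_rate, old_rate):
    """Return how many times faster new_rate is than old_rate."""
    return new_rate / old_rate


def payload_kb_per_second(rate, size_kb=MESSAGE_SIZE_KB):
    """Return the payload volume moved per second, in kB."""
    return rate * size_kb


if __name__ == "__main__":
    for label, rate in (("5.3", MQ_53_TPS), ("6.2", MQ_62_TPS)):
        ratio = speedup(rate, PROPRIETARY_DESIGN_MPS)
        volume = payload_kb_per_second(rate)
        print(f"WebSphereMQ {label}: {ratio:.1f}x the design spec, {volume} kB/s")
```

Even against the older 5.3 benchmark, the gap is better than 25 to 1.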

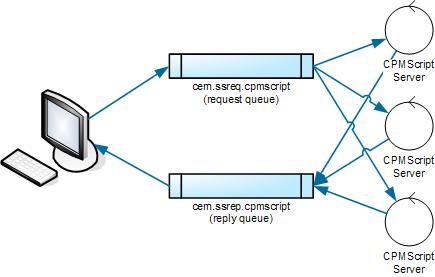

The diagram above gives a simple overview of how shared services work with IBM MQ. Starting at the left of the diagram, we have a client application that is connected to both a request and a reply queue. These queues are used to transport the client request to the application servers and the reply back to the client application.
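The request/reply flow described above can be sketched with Python's standard in-process queues. This is only an illustration of the pattern, not IBM MQ's API or Millennium's implementation: the function names, the message layout and the `correlation_id` field are all assumptions I have made for the example.

```python
import queue
import threading


def application_server(request_q, reply_q):
    """Hypothetical stand-in for an application server.

    Reads requests until it sees None, and puts one reply per request
    on the reply queue.
    """
    while True:
        msg = request_q.get()
        if msg is None:          # shutdown signal for this sketch
            break
        reply_q.put({
            "correlation_id": msg["correlation_id"],
            "body": msg["body"].upper(),   # placeholder for real work
        })


def send_request(request_q, reply_q, correlation_id, body, timeout=2.0):
    """Client side: put a request on one queue, wait for the reply on the other."""
    request_q.put({"correlation_id": correlation_id, "body": body})
    return reply_q.get(timeout=timeout)


def demo():
    request_q = queue.Queue()    # client -> application server
    reply_q = queue.Queue()      # application server -> client
    server = threading.Thread(target=application_server,
                              args=(request_q, reply_q))
    server.start()
    try:
        return send_request(request_q, reply_q, 1, "ping")
    finally:
        request_q.put(None)      # stop the server thread
        server.join()


if __name__ == "__main__":
    print(demo())
```

The point of the two-queue layout is that the client never talks to the server process directly; it only ever sees its request queue and its reply queue.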

If you are an AIX or HP-UX client, I recommend that you enable MQSS as quickly as possible to help your throughput. I wish I could say the same for VMS clients. Unfortunately, VMS experiences capacity issues with MQSS. Its TCP/IP stack and BG devices can exhaust their limit of 32,768 ports, at which point they no longer take new connections. Cerner now recommends that all VMS clients stop using MQSS, except for the Java services, which only know how to communicate via MQSS.

While all of this speed is great for clinicians and business staff using Millennium, there is a hitch. Updated Millennium configuration standards state that when MQSS is enabled, the number of SSPs (SCP Entry ID 1) should be reduced to four and that all dedicated SSPs should be disabled. Based on the client data I’ve seen, numerous clients who have gone live on MQSS with only four SSPs have experienced varying degrees of queuing, backlogs or slowdowns in the SSPs. I recently worked with a client who had more than 82 hours of queuing for the CPM Script Batch 001 service.

Their queuing was typical of many others: It was constant, not centered around the normal usage pattern of one peak in the late morning and another in the early afternoon. The only way to mitigate this backlog is to increase the number of SSPs or create a dedicated SSP, and then stop and restart all of the affected servers on the node. The restart moves the service to the new generic SSP or dedicated SSP and should stop the queuing. (To learn more about SSPs, read previous blogs on “SSP’s Path for Rapid Transactions” and “Setting up a Dedicated SSP.”)

This is a very surprising discovery. The Millennium switch to a commercial middleware like WebSphereMQ was supposed to eliminate this queuing. Millennium support might attribute the problem to the SSPs consuming resources that could be freed up for other processing. Although technically true, the impact of the SSPs is relatively small. An SSP starts with less than 16 MB of RAM and can grow to about 128 MB. If I ran 16 SSPs that all grew to 128 MB (they often do not grow that large), I would be using 2 GB in total, the same amount of memory as any one of the Java servers (SCP Entry ID 351 through 370).

Since the SSPs do not handle client communication (they don’t talk with PowerChart, FirstNet, PowerChart Office, etc.), they consume very little CPU. These processes do some disk I/O, but compared to CPM Script (SCP Entry ID 51) or the Java servers, it is such a small amount of I/O that it is really just background noise. The resource consumption, therefore, is too small compared to the rest of the Millennium application executables to have any significant impact on the resources of the node, LPAR or vPar.
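The memory arithmetic is easy to check for other SSP counts. The sketch below uses the per-SSP figures from this post (roughly 16 MB at start, about 128 MB at the high end); the function name and the list of counts are my own choices for illustration, and the totals are worst-case numbers, since SSPs often do not grow that large.

```python
# Per-SSP memory figures quoted in this post (approximate).
SSP_START_MB = 16    # an SSP starts with less than this
SSP_MAX_MB = 128     # roughly how large an SSP can grow


def ssp_memory_mb(count, per_ssp_mb=SSP_MAX_MB):
    """Return total memory in MB for `count` SSPs at `per_ssp_mb` each."""
    return count * per_ssp_mb


if __name__ == "__main__":
    for count in (4, 8, 16, 20):
        low = ssp_memory_mb(count, SSP_START_MB)
        high = ssp_memory_mb(count)
        print(f"{count:>2} SSPs: under {low} MB at start, "
              f"up to {high} MB ({high / 1024:.2f} GB) fully grown")
```

Going from four SSPs to 20 costs, at the very worst, about 2 GB of additional RAM.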

I still recommend that all non-VMS users switch to MQSS as soon as they can. The throughput gains are impressive. I just ask that you not reduce the SSPs to four. Although I don’t know your specific configuration needs, I would suggest you start somewhere in the range of 8 to 20. Eight was the old minimum recommended value for SSPs in non-production domains, and I have always recommended 20 as a base for any production domain.

Although VMS clients can’t benefit from MQSS, I do urge you to upgrade your system to make it as stable as possible and to ensure that you are able to use all 32,768 ports. Clients on VMS version 8.3 should upgrade to the appropriate VMS 8.3 with TCP/IP Services version 5.6 ECO 5 or later.

Prognosis: Even with MQSS’s welcome increases in throughput, SSPs still have a role in Millennium. Keeping them properly tuned will help prevent queuing in Millennium’s proprietary middleware.